White Paper

Accelerating Monte Carlo Simulations for Faster Statistical Variation Analysis, Debugging, and Signoff of Circuit Functionality

Spectre Fast Monte Carlo (FMC) Analysis

Over the years, semiconductor process nodes have been scaled aggressively, with device dimensions now at and below 5nm. This scaling, along with lower device operating voltages and currents, has allowed modern integrated circuits (ICs) and system-on-chip (SoC) designs to integrate more devices in a smaller chip area while keeping power consumption low and performance high. However, a consequence of aggressive process scaling combined with large-volume manufacturing is that ensuring a very low probability of failed ICs has become extremely difficult.

Accounting for manufacturing variations in the early stages of IC design is one of the most significant design challenges for modern ICs on advanced nodes, and it is necessary to keep the probability of IC failure low. Monte Carlo (MC) simulations are an integral part of such methodologies; however, they require significant computing resources, especially for blocks that have a low probability of failure but are replicated many times in a design.

Even with the increased performance of simulation tools, such as the Cadence Spectre X Simulator, and the availability of large-scale computing resources (more cores and cloud computing), it is impractical, and in many cases impossible, to perform these computationally intensive MC simulations. This is especially true for high-sigma analysis, where the number of MC simulations needed can exceed a billion, and also for cases where the number of simulations is small but each simulation is expensive. As a result, EDA solutions that use state-of-the-art simulator technologies and methodologies to enable fast (minimum number of simulations) and accurate high-sigma analysis are essential.

Spectre FMC Analysis is integrated into the well-known Spectre Simulation Platform, widely considered the gold standard for high-performance SPICE-accurate circuit simulation. The machine learning (ML)-based Spectre FMC Analysis makes any kind of high-sigma analysis over vast sample spaces practical, accurate, and reliable for circuit yield verification before tapeout. In this paper, we demonstrate only the worst samples estimation method of Spectre FMC Analysis, using multiple case studies covering different types of circuits. The case studies apply high-sigma analysis to memory bit-cells, flip-flops, a timing path, and analog IP in advanced-process nodes, and are representative of the analysis problems encountered in modern chip designs.

Overview

Introduction

Modern SoCs have diverse building blocks, ranging from memories to standard cells (such as flip-flops, multiplexers, and I/O cells) and analog blocks (including PLLs, ADCs, power management blocks, and RF components). In nanometer technologies, incorporating variability as a design factor is critical in the early stages of such complex SoC designs, at both the chip level and the block level, because random process variations can cause large variations in the basic functionality of the smallest building blocks, such as SRAM bit-cells or flip-flops.

These variations lead to a higher probability of IC failures in large-volume production, resulting in poor yield and performance. For example, random dopant fluctuations (RDF), line edge roughness (LER), and variations in gate oxide thickness are a few of the well-known sources of variation that affect SRAM bit-cell characteristics. The high sensitivity of SRAM bit-cell read and write operations to these random process variations can cause a whole memory block to fail, which in turn can cause an SoC operational failure. Such failures can also originate in other sensitive blocks like ADCs, DACs, PLLs, or power management circuits, even though these blocks are not instantiated in densities comparable to memories and flip-flops.

Over the years, process design kits (PDKs) provided by foundries have been able to capture local and global transistor device-level physical process parameter variations as sufficiently accurate statistical models. These statistical models are then used across the Spectre Simulation Platform to perform statistical simulations and estimate the probability of a design failure.

With semiconductor technology node advancements, higher component multiplicity is expected as more transistors are integrated into a smaller area. However, higher-density integration brings no benefit without a good manufacturing yield. For example, as the number of memory and logic design blocks increases, even a very small probability of failure, such as one failure in a billion (often referred to as six-sigma yield), has a significant impact on yield. To estimate the yield, an IC designer must either run a billion or more MC samples, which is computationally expensive and largely impractical, or adopt alternative strategies.

Even for blocks expected to appear only a few times in a chip, running enough Monte Carlo simulations to reduce the uncertainty of a failure estimate, even just 5,000 samples, is expensive because each simulation of the block takes a long time. Therefore, a faster Monte Carlo analysis-based solution is required for both low-sigma and high-sigma analysis, to reduce the uncertainty of an IC failure estimate before tapeout and to meet mass production deadlines and time-to-market schedules.
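To make the cost of brute-force estimation concrete, the Python sketch below uses the standard binomial approximation for the relative standard error of a Monte Carlo failure-probability estimate, sqrt((1 - p) / (N p)). This is textbook statistics, not part of Spectre FMC Analysis, and the function names are illustrative. For example, with an assumed failure probability of 10⁻³, 5,000 samples still leave a relative standard error of roughly 45%, and pinning down a one-in-a-billion failure to 10% relative error takes on the order of 10¹¹ samples.

```python
import math


def mc_samples_needed(p_fail, rel_err):
    """Brute-force MC samples N so the estimated failure probability has the
    given relative standard error, using sqrt((1 - p) / (N * p))."""
    return math.ceil((1.0 - p_fail) / (p_fail * rel_err ** 2))


def relative_error(p_fail, n_samples):
    """Relative standard error of a failure-probability estimate from n_samples."""
    return math.sqrt((1.0 - p_fail) / (n_samples * p_fail))


if __name__ == "__main__":
    # One-in-a-billion failure probability, 10% relative error target
    print(mc_samples_needed(1e-9, 0.1))
    # Hypothetical block with a 1e-3 failure probability and 5,000 samples
    print(relative_error(1e-3, 5000))
```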

Accelerating Monte Carlo Simulations

Let us consider SRAM blocks as an example, as they are among the most common blocks in today's SoCs for a wide variety of memory applications. In SRAM designs, memory bit-cells are arranged in rows and columns at high density, along with sense amplifier blocks, to form high-density memory blocks. These memory designs, along with dense digital logic paths containing large numbers of flip-flops, are especially sensitive to advanced-node process variations. Because these circuits are instantiated in such large numbers, even a very low probability of functional failure per instance can still lead to poor yield and performance. Finding this small probability of failure in a large sample space is therefore important to ensure high yield and performance. As an example, for SRAMs, the possible bit-cell failure characteristics that are well-studied and analyzed by design experts include the following:

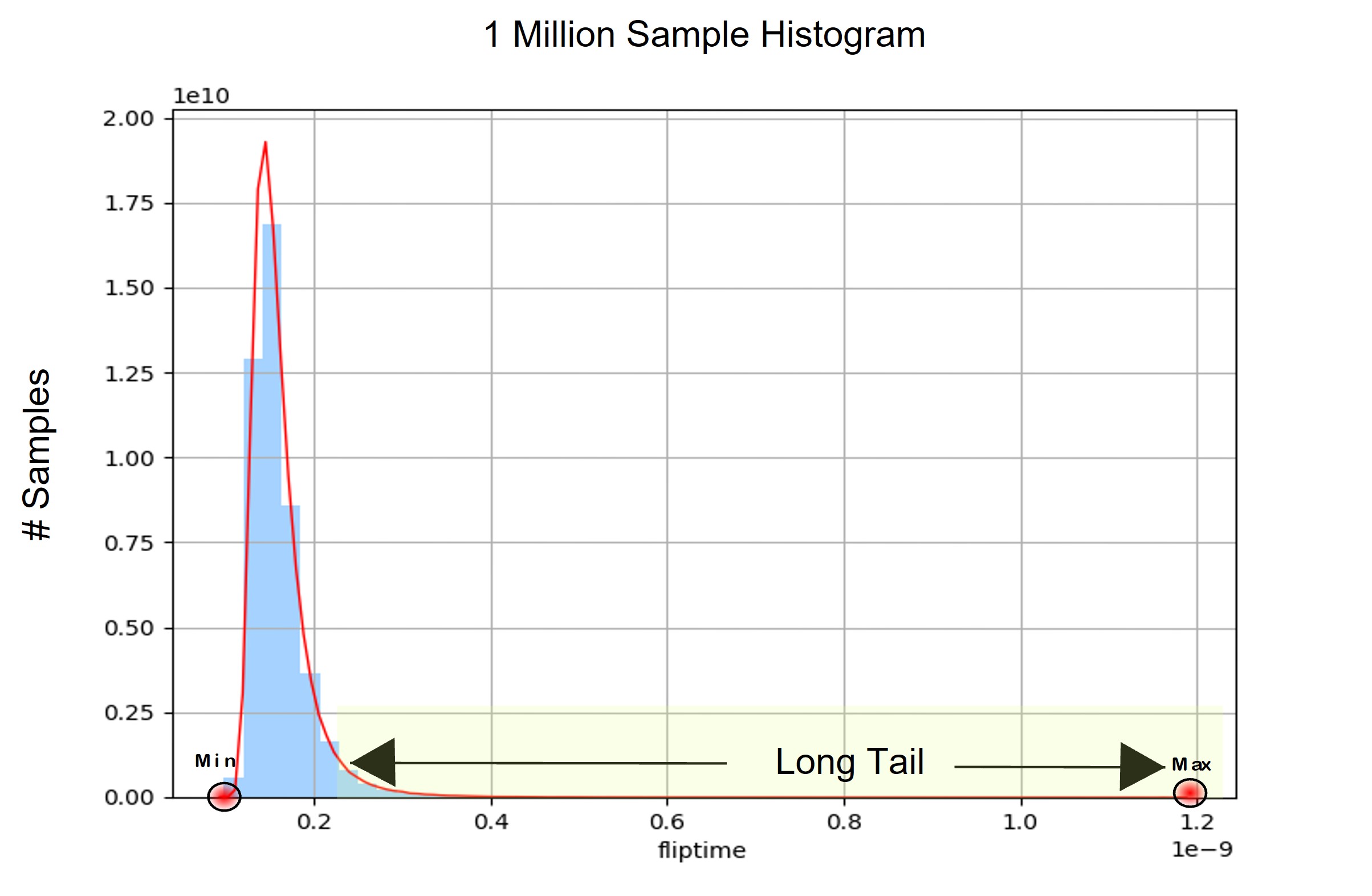

The term sigma expresses the required yield in an easily interpretable manner. Six-sigma yield corresponds to roughly one failure in a billion when only one tail of the distribution is counted, or to a yield of 99.99999980% when both tails are counted. Today, for SRAM designs targeted to advanced-process nodes to achieve a high yield, the bit-cell failure probability must be very low, in the range of 10⁻⁶ to 10⁻¹², that is, 4.5σ to 6.5σ. Effectively, this means that characterizing at high sigma would require between one million and one billion simulations to simulate the entire distribution and find the worst-case measurement results in its tails. Figure 1 shows an example histogram from 1 million MC simulations.
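The sigma/probability relationship can be checked with a short Python sketch that converts between a sigma value and the probability of landing beyond it on a standard normal distribution. The Gaussian here is only the reference convention used to express yield as a sigma; it says nothing about the actual, often non-Gaussian, measurement distribution. The `two_sided` flag shows why "one failure in a billion" (one tail) and 99.99999980% (both tails) can both be described as six sigma. The function names are illustrative.

```python
import math
from statistics import NormalDist


def fail_prob_from_sigma(sigma, two_sided=False):
    """Probability of a standard normal falling beyond +sigma (both tails if two_sided)."""
    tail = 0.5 * math.erfc(sigma / math.sqrt(2.0))  # erfc avoids cancellation deep in the tail
    return 2.0 * tail if two_sided else tail


def sigma_from_fail_prob(p_fail, two_sided=False):
    """Inverse of fail_prob_from_sigma: the sigma level matching a failure probability."""
    tail = p_fail / 2.0 if two_sided else p_fail
    return -NormalDist().inv_cdf(tail)


if __name__ == "__main__":
    for s in (4.5, 6.0, 6.5):
        print(f"{s} sigma: one-sided {fail_prob_from_sigma(s):.3g}, "
              f"two-sided {fail_prob_from_sigma(s, two_sided=True):.3g}")
    for p in (1e-6, 1e-9, 1e-12):
        print(f"p = {p:g}: {sigma_from_fail_prob(p):.2f} sigma (one-sided)")
```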

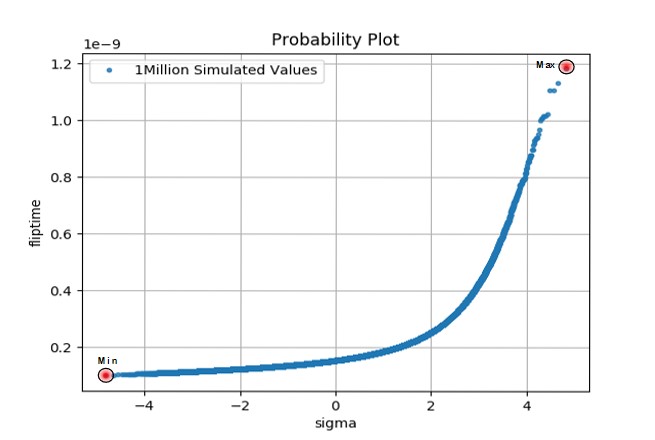

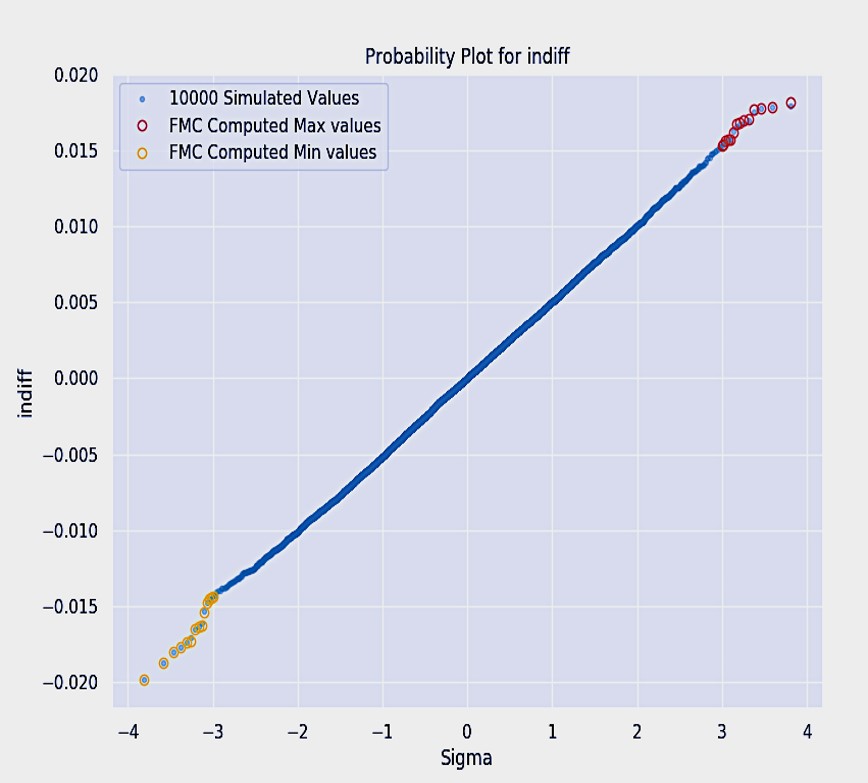

Figure 1 shows the distribution of a measurement from the memory bit-cell that checks write operation stability, or write margin. This measurement captures the bit-cell flip time, from the word line (WL) rising to 50% to a bit-cell internal node rising to 70%. A write failure may also occur if the WL pulse is not long enough for the bit-cell to flip its internal nodes. The write margin decreases as sigma increases, so the high-sigma tail is the worst case for a write operation. Figure 1 also shows a non-Gaussian bell curve, which is typical and depends on the type of design, the advanced technology node used, and how the many statistical variables interact in a high-dimensional design space. The minimum and maximum tail points, along with the non-normal behavior of the distribution, can be seen more clearly using normal quantile plots, as shown in Figure 2.
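A normal quantile plot pairs each sorted measurement with the sigma it would have if the data were Gaussian: a straight line indicates normal behavior, and curvature in the tails exposes non-normality. The Python sketch below computes those pairs. It assumes Blom plotting positions, (i - 3/8) / (n + 1/4), which is one common choice; the plotting positions used in the figures are not stated here and may differ. The lognormal data in the usage block is a hypothetical skewed example.

```python
from statistics import NormalDist


def normal_quantile_points(values):
    """(theoretical sigma, observed value) pairs for a normal quantile plot.

    Uses Blom plotting positions (i - 3/8) / (n + 1/4) for the i-th smallest value.
    """
    inv_cdf = NormalDist().inv_cdf
    ordered = sorted(values)
    n = len(ordered)
    return [(inv_cdf((i - 0.375) / (n + 0.25)), v) for i, v in enumerate(ordered, start=1)]


if __name__ == "__main__":
    import random

    rng = random.Random(0)
    data = [rng.lognormvariate(0.0, 0.3) for _ in range(10_000)]  # skewed toy data
    pts = normal_quantile_points(data)
    print(pts[0], pts[-1])  # extreme points give the min and max tail sigma
```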

Performing more than one million simulations to find the high-sigma tails in a design with a large number of standard cells or memory bit-cells, or in timing-critical digital paths, is not a productive approach, even with the latest performance improvements in the Spectre X Simulator and distributed processing. As an alternative, Spectre FMC Analysis, integrated into the Spectre Simulation Platform, can accurately identify the high-sigma worst-case tail values of interest with the minimum number of simulations. The next section covers this in detail, followed by case studies.

Spectre FMC Analysis for Worst Samples Estimation

There are several methods and point tools available for estimating high-sigma tails in a high-dimensional design space involving many statistical process variables. Each method and tool has its own advantages, disadvantages, and range of applicability. In this paper, we give an overview of the sample reordering technique and the ML technology that have been integrated into the Spectre Simulation Platform.

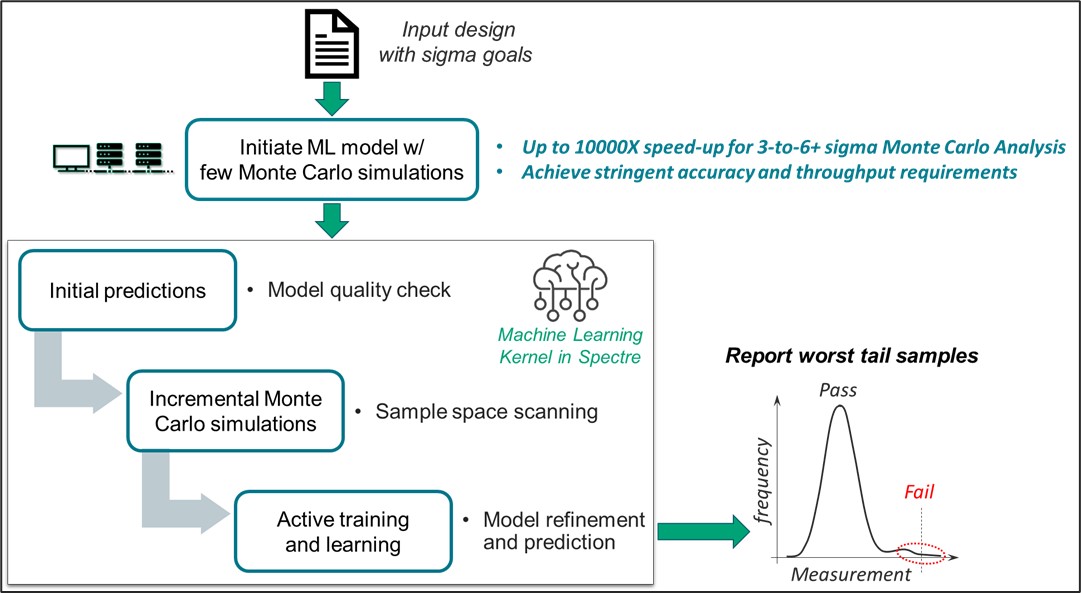

Figure 3 shows a very high-level abstraction of what is inside Spectre FMC Analysis for fast worst samples estimation. Spectre FMC Analysis is built on top of the industry-proven Spectre Monte Carlo (MC) engine. The worst samples estimation method in Spectre FMC Analysis is driven by the following parameters:

Based on these parameters, Spectre FMC Analysis proceeds as follows:

- A few random MC simulations are performed to build a mathematical response surface model (RSM) of the output as a function of the inputs, using an ML kernel in the Spectre environment. This RSM is used for initial predictions over the entire sample space of interest.

- Using the model predictions of the measurement values specified as goals, the MC samples are reordered from worst to best.

- Model quality is checked by simulating a number of the predicted worst iterations.

- Simulating the predicted worst iterations yields an estimate of the predictive model error and of the quality of the sample reordering. This information is used to update the RSM, which then predicts the values for the entire sample space of interest again.

- Multiple intelligent mechanisms, which are proprietary, drive the stopping conditions for worst samples estimation.

- The final result summarizes the worst samples for each measurement goal, giving the sigma, the exact MC iteration number, and the value of each sample, allowing further debugging.
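The loop above can be sketched in Python as a toy illustration; it is not Spectre's implementation. Because the ML kernel and stopping conditions are proprietary, this sketch assumes a least-squares quadratic response surface as a stand-in for the RSM and a fixed number of rounds as a stand-in for the stopping conditions. `toy_delay_ps` is a hypothetical analytic function standing in for a SPICE simulation, list indices stand in for MC iteration numbers, and all names are illustrative.

```python
import random


def toy_delay_ps(x):
    """Hypothetical stand-in for an expensive SPICE simulation (delay in ps)."""
    return 10.0 + 2.0 * x[0] + 0.5 * x[1] ** 2 - 1.0 * x[2]


def features(x):
    """Constant, linear, and squared terms for each statistical variable."""
    return [1.0] + list(x) + [v * v for v in x]


def fit_rsm(xs, ys):
    """Least-squares response surface: solve (A^T A) w = A^T y by Gauss-Jordan elimination."""
    rows = [features(x) for x in xs]
    n = len(rows[0])
    m = [[sum(r[i] * r[j] for r in rows) for j in range(n)]
         + [sum(r[i] * y for r, y in zip(rows, ys))] for i in range(n)]
    for c in range(n):
        p = max(range(c, n), key=lambda k: abs(m[k][c]))  # partial pivoting
        m[c], m[p] = m[p], m[c]
        for r in range(n):
            if r != c:
                f = m[r][c] / m[c][c]
                m[r] = [a - f * b for a, b in zip(m[r], m[c])]
    return [m[i][n] / m[i][i] for i in range(n)]


def predict(w, x):
    return sum(wi * fi for wi, fi in zip(w, features(x)))


def worst_samples_search(samples, simulate, n_init=40, batch=10, rounds=4, seed=1):
    """Train / predict / reorder / simulate loop; 'worst' means the largest value."""
    rng = random.Random(seed)
    # 1. A few random MC simulations train the initial model.
    simulated = {i: simulate(samples[i]) for i in rng.sample(range(len(samples)), n_init)}
    for _ in range(rounds):  # fixed budget; the real stopping conditions are proprietary
        idx = list(simulated)
        w = fit_rsm([samples[i] for i in idx], [simulated[i] for i in idx])
        # 2. Predict every sample and reorder from worst to best.
        order = sorted(range(len(samples)), key=lambda i: predict(w, samples[i]), reverse=True)
        # 3. Simulate the predicted-worst samples not yet simulated; they refine the next fit.
        todo = [i for i in order if i not in simulated][:batch]
        for i in todo:
            simulated[i] = simulate(samples[i])
    # 4. Report (sample index, simulated value) pairs from worst to best.
    return sorted(simulated.items(), key=lambda kv: kv[1], reverse=True)


if __name__ == "__main__":
    rng = random.Random(42)
    samples = [[rng.gauss(0.0, 1.0) for _ in range(3)] for _ in range(5000)]
    ranked = worst_samples_search(samples, toy_delay_ps)
    print(len(ranked), "simulations; worst three:", ranked[:3])
```

Because this toy response lies exactly in the span of the quadratic features, the surrogate becomes exact after the first fit, so the predicted-worst batch contains the true worst sample. Real circuit responses are rarely this cooperative, which is why model error is re-estimated on every round.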

ML-based active training, learning, prediction, sample reordering, and model refinement continue iteratively until the desired stopping conditions are met. The target value that the user specifies for each measurement goal is used to label a simulated value as a pass or a fail. For example, the goal delay max 10ps indicates that, during predictions, any evaluated delay measurement exceeding 10ps is a functionality failure; this influences the estimation of the worst tail samples of the distribution for this goal.
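The pass/fail labeling can be sketched as a small Python data type. The `Goal` class and its `direction` field are hypothetical and are not Spectre netlist syntax; they only mirror the meaning of a goal such as delay max 10ps, or a minimum-voltage goal like the 0.3V core-voltage check in the case studies.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Goal:
    """Hypothetical goal record, e.g. Goal("delay", "max", 10e-12) for 'delay max 10ps'."""
    measurement: str
    direction: str  # "max": values above target fail; "min": values below target fail
    target: float

    def fails(self, value: float) -> bool:
        """Label a simulated or predicted value as a failure for this goal."""
        if self.direction == "max":
            return value > self.target
        if self.direction == "min":
            return value < self.target
        raise ValueError(f"unknown direction: {self.direction}")

    def worst_first(self, values):
        """Order values so those closest to, or past, failing come first."""
        return sorted(values, reverse=(self.direction == "max"))


if __name__ == "__main__":
    delay_goal = Goal("delay", "max", 10e-12)
    print(delay_goal.fails(11e-12), delay_goal.fails(9e-12))
```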

Distributed processing allows vast sample space scanning, model evaluations, and simulations to run concurrently, for faster turnaround times and compatibility with compute farm and cloud computing environments.
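As a rough illustration of why this parallelizes well, the Python sketch below dispatches a batch of independent MC iterations to a thread pool using `concurrent.futures`. The threads are an assumption standing in for compute-farm or cloud jobs; Spectre's actual distribution mechanism is not described in this paper, and `simulate_batch` is a hypothetical helper.

```python
from concurrent.futures import ThreadPoolExecutor


def simulate_batch(iterations, simulate, max_workers=4):
    """Run independent MC iterations concurrently and return {iteration: value}.

    Each call to simulate() is independent, so the batch parallelizes cleanly;
    pool.map preserves input order, so results line up with their iterations.
    """
    iterations = list(iterations)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        values = list(pool.map(simulate, iterations))
    return dict(zip(iterations, values))


if __name__ == "__main__":
    # Hypothetical stand-in for launching one simulation job per MC iteration.
    print(simulate_batch([1201, 99999], lambda it: it % 7))
```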

Worst Samples Estimation Case Studies

The following case studies show the effectiveness of the Spectre FMC Analysis worst samples estimation method.

Bit-Cell Design

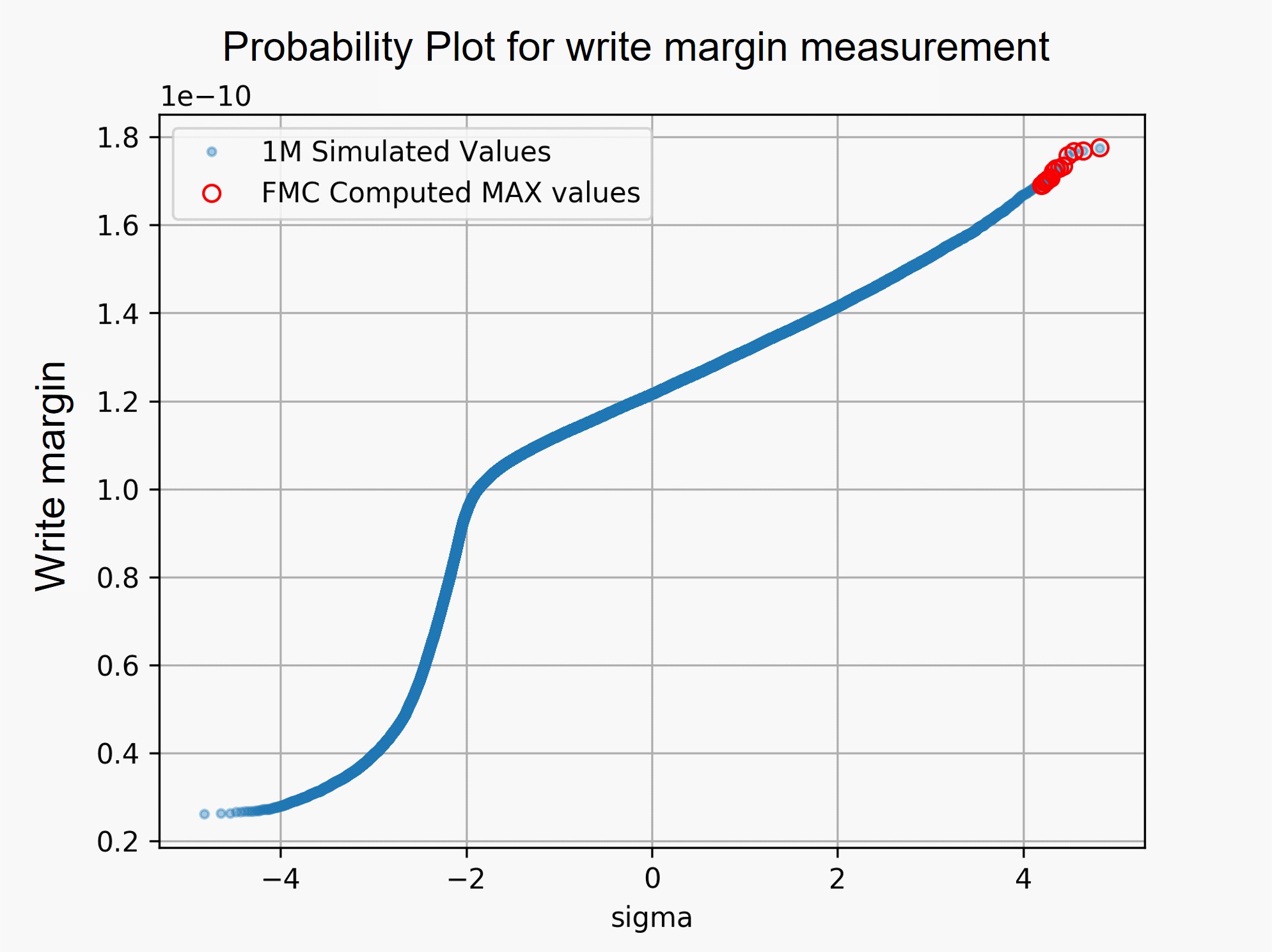

A bit-cell array design using statistical design models from a sub-10nm PDK was tested using the traditional MC simulation flow with the Spectre X Simulator and with Spectre FMC Analysis. The goal was to identify the measurement values close to 4.5σ from a sample space of 1 million MC samples. Using the Spectre X Simulator on this bit-cell array, with a circuit inventory of 8,000 transistors and fewer than 1,000 statistical parameters, 1 million brute-force MC simulations were performed to find the minimum and maximum worst-case values of the measurement.

Using Spectre FMC Analysis, we accurately identified the high-sigma minimum and maximum tail ends in less than five minutes with just 746 simulations. Figure 4 shows the correlation between brute-force MC and FMC simulations in the form of a probability plot. The blue dots depict the 1 million simulated MC samples, where each dot is the measurement value obtained from an MC simulation. The red circles depict the high-sigma values identified by Spectre FMC Analysis; they overlap with the MC simulated values, confirming their accuracy, with a speedup of 1340X. Also note the non-linear nature of the measurement captured by the probability plot in Figure 4. This shows that the ML kernel in Spectre FMC Analysis handles such non-linearity, which is expected in circuit measurement distributions.

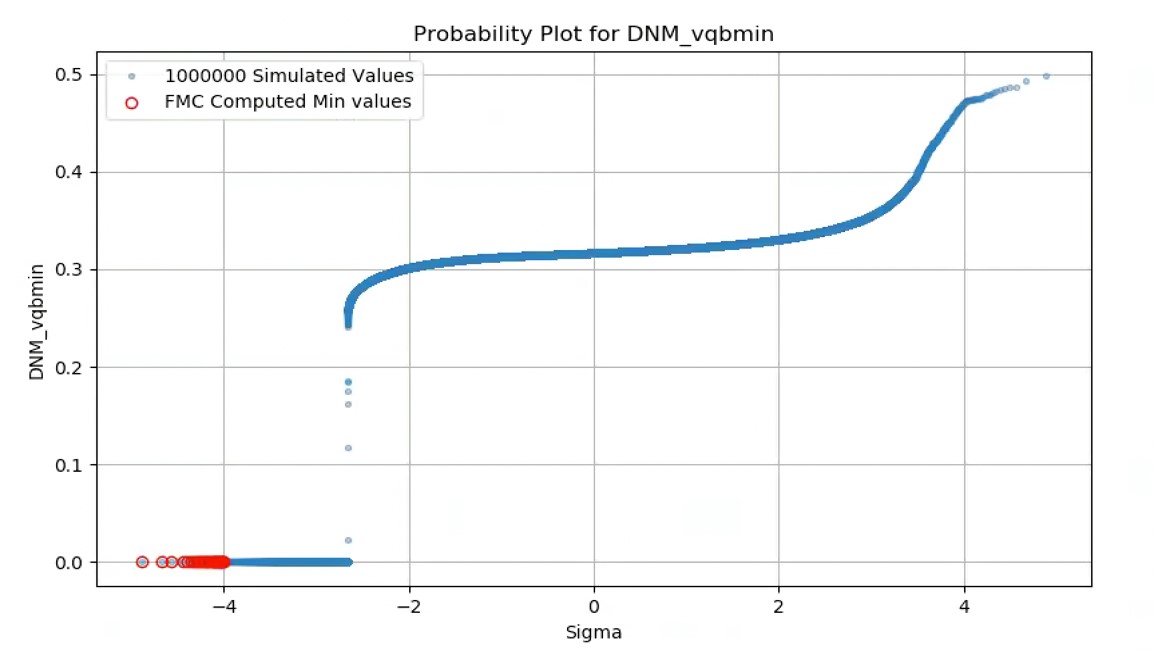

Discontinuous distributions can also arise from circuits operating in different modes or from the occurrence of failures. An example is shown in Figure 5, where a measurement from another bit-cell design, this one in a sub-7nm process with 100 statistical variables, was tested with the worst samples estimation method of Spectre FMC Analysis. The goal was to identify the possibility of the core bit-cell voltage dropping below 0.3V from a sample space of 1 million MC samples. With just 2,500 simulations, Spectre FMC Analysis accurately identified the worst samples from 4.0σ to 4.9σ, a 400X speedup.

From both bit-cell examples, we see that:

Flip-Flop Design

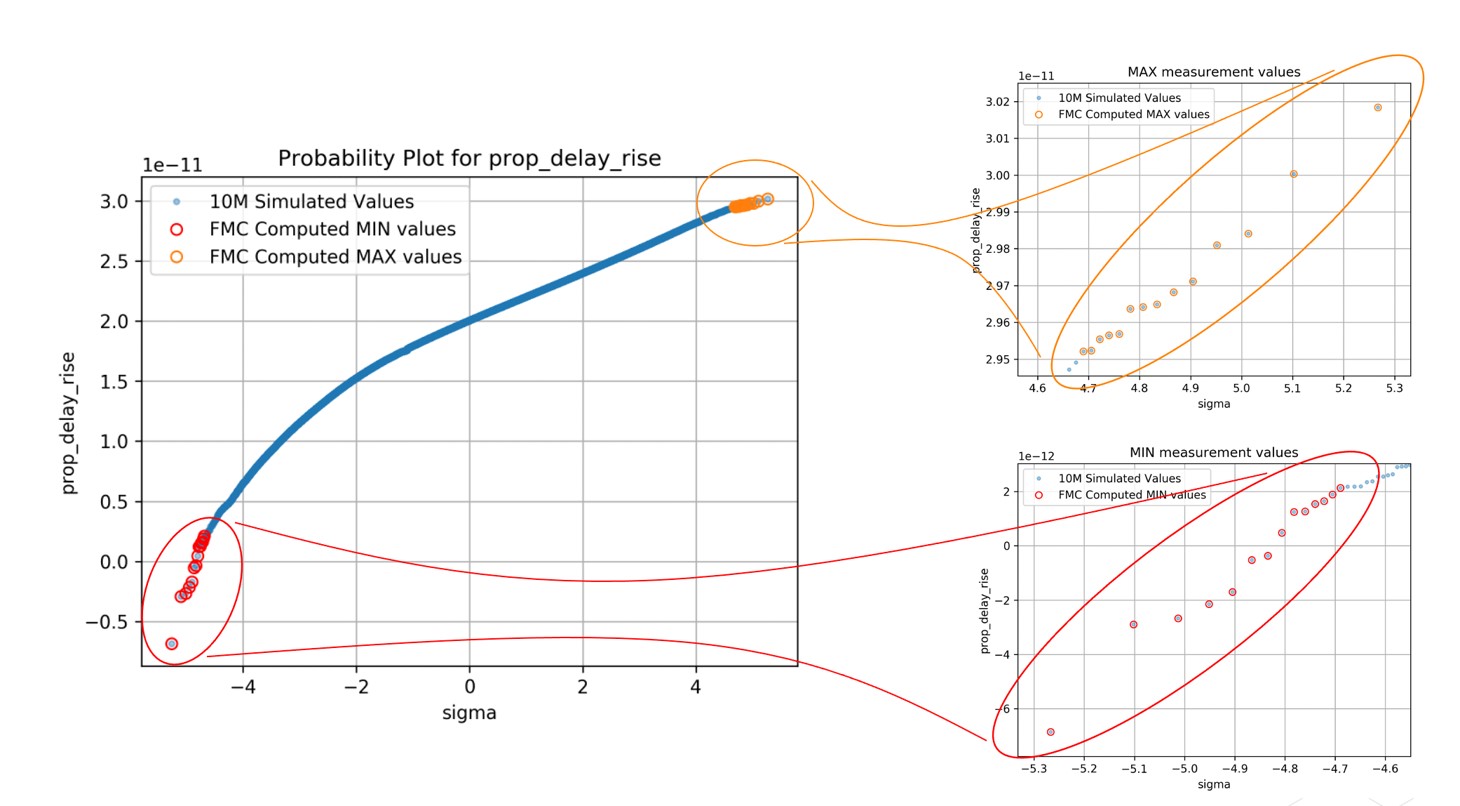

A multi-bit flip-flop design using statistical design models from a sub-5nm PDK was tested using the traditional MC simulation flow with the Spectre X Simulator and Spectre FMC Analysis. The goal was to find the high-sigma worst-case values for a delay-based measurement close to 4.9σ from a sample space of 10 million MC samples.

Using the Spectre X Simulator, 10 million brute-force MC simulations were performed on the flip-flop design, which had 460 transistors and 3,000 statistical parameters. From these MC simulations, the minimum and maximum worst-case values of the delay measurement were identified. With just 1,064 simulations in less than three minutes, Spectre FMC Analysis accurately identified the high-sigma minimum and maximum tail ends.

Spectre FMC Analysis reduced the number of simulations required to identify the high-sigma tail ends by nearly 10,000X (1,064 versus 10 million). Figure 6 shows the probability plot of this test, along with a zoomed view showing excellent correlation between the brute-force and FMC values.

Timing Path

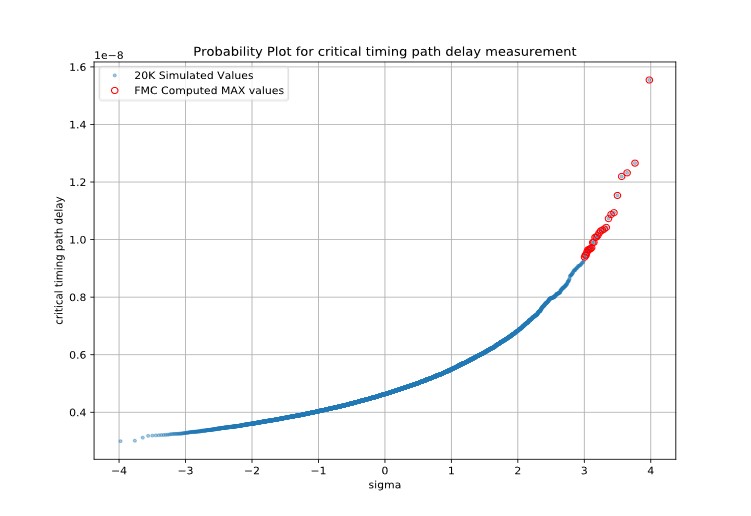

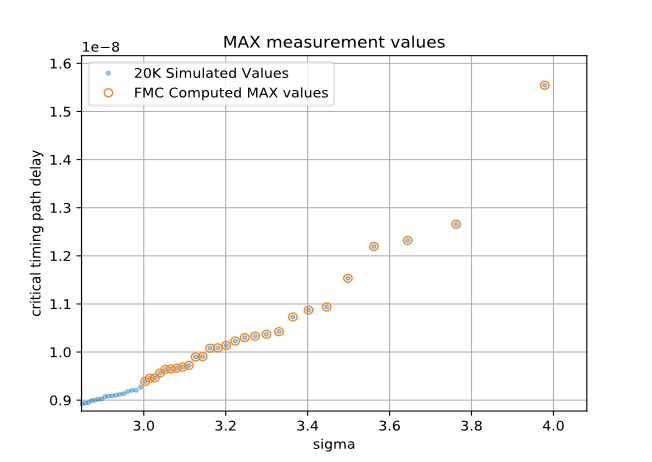

A critical-path SPICE netlist, created by the Cadence Tempus Timing Solution using statistical design models from a sub-14nm PDK, was tested using the traditional MC simulation flow with the Spectre X Simulator and Spectre FMC Analysis. The goal was to find all the worst-case values between 3σ and 4σ with no more than 20,000 MC simulations. This extracted critical timing path netlist had a circuit inventory of 4,600 transistors and a total of 3,665 statistical parameters. A total of 20,000 brute-force simulations were performed to rank the worst-case critical timing path values.

Next, Spectre FMC Analysis was used on the same design, and with just 1,182 simulations, all the high-sigma values between 3σ and 4σ were accurately identified. In this case, Spectre FMC Analysis reduced the number of simulations required to identify the high-sigma tail ends by 17X. The probability plot of this test is shown in Figure 7.

ADC Design

A 14nm FinFET-based analog-to-digital converter (ADC) design was tested using the traditional MC simulation flow with the Spectre X Simulator and Spectre FMC Analysis. The goal was to find the high-sigma worst-case values for an input offset voltage measurement close to 3.9σ from a sample space of 10,000 MC samples.

Using the Spectre X Simulator, 10,000 brute-force MC simulations were performed on the ADC design, which had 5,000 transistors, 4,000 circuit nodes, and close to 45,000 statistical parameters. From these MC simulations, the minimum and maximum worst-case values of the input offset voltage measurement were identified. With just 900 simulations, Spectre FMC Analysis accurately identified the high-sigma minimum and maximum tail ends, as shown in Figure 8. Spectre FMC Analysis reduced the number of simulations required to identify the high-sigma tail ends by more than 10X.
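The reduction factors in these case studies are ratios of simulation counts. The Python snippet below recomputes them from the numbers reported above; it counts simulations only, so wall-clock gains also depend on per-simulation cost and on how the simulations are distributed. `CASES` and `reduction_factor` are illustrative names.

```python
# (case study, brute-force MC simulations, Spectre FMC Analysis simulations), as reported above
CASES = [
    ("Bit-cell array, ~4.5 sigma", 1_000_000, 746),
    ("Bit-cell, discontinuous, 4.0-4.9 sigma", 1_000_000, 2_500),
    ("Multi-bit flip-flop, ~4.9 sigma", 10_000_000, 1_064),
    ("Timing path, 3-4 sigma", 20_000, 1_182),
    ("ADC input offset, ~3.9 sigma", 10_000, 900),
]


def reduction_factor(brute_force_sims, fmc_sims):
    """Ratio of brute-force to FMC simulation counts."""
    return brute_force_sims / fmc_sims


if __name__ == "__main__":
    for name, mc, fmc in CASES:
        print(f"{name:40s} {mc:>11,d} / {fmc:>6,d} = {reduction_factor(mc, fmc):8.1f}X")
```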

Easy Debugging of a Failure and Moments

Since Spectre FMC Analysis is built on Spectre MC analysis, the samples simulated in Spectre FMC Analysis produce consistent results when simulated with standard MC analysis. This simplifies debugging and allows standard Spectre MC analysis features to be used. For example, if Spectre FMC Analysis identified the worst two samples as 1,201 and 99,999 for the design under test (DUT), the following MC analysis will rerun those specific samples or dump the actual process and mismatch parameters associated with them:

```
mc1 montecarlo seed=12345 runpoints=[ 1201 99999 ] … {
    tr1 tran …
}
```

The biggest advantage is that existing netlist-driven flows involving large numbers of batch simulations on the Spectre Simulation Platform do not need to change. With a few added netlist options, existing netlists used in traditional MC flows can easily be migrated to Spectre FMC Analysis for high-yield verification or for finding the high-sigma worst-case samples of interest.

Spectre FMC Analysis can also estimate five moment-based statistics that characterize the measurement distribution of the DUT: the mean, variance, standard deviation, skewness, and kurtosis. These are included in the Spectre FMC Analysis worst samples estimation report.
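For reference, the five statistics can be computed from a set of measurement values as in the Python sketch below. It assumes population (biased) estimators and reports non-excess kurtosis, which equals 3 for a Gaussian; the conventions used in the Spectre FMC Analysis report are not stated in this paper and may differ. `distribution_moments` is an illustrative name.

```python
import math


def distribution_moments(values):
    """Mean, variance, standard deviation, skewness, and kurtosis (population estimators)."""
    n = len(values)
    mean = sum(values) / n
    m2 = sum((v - mean) ** 2 for v in values) / n  # second central moment = variance
    m3 = sum((v - mean) ** 3 for v in values) / n
    m4 = sum((v - mean) ** 4 for v in values) / n
    return {
        "mean": mean,
        "variance": m2,
        "std": math.sqrt(m2),
        "skewness": m3 / m2 ** 1.5,  # 0 for a symmetric distribution
        "kurtosis": m4 / m2 ** 2,    # non-excess: 3 for a Gaussian
    }


if __name__ == "__main__":
    print(distribution_moments([1.0, 2.0, 3.0, 4.0, 5.0]))
```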

Conclusion

In this paper, we have seen that the Spectre FMC Analysis-based worst samples estimation method has the following advantages: