White Paper

Moving to the Next Level of Autonomous Driving Through Advanced, Extensible DSPs

In little more than a century, road vehicles have changed immeasurably. Just as the earliest motorists could not have pictured today’s cars, we probably can’t imagine what vehicles will look like in another 100 years; however, we do have a good idea of what they will offer within a decade. Autonomy is expected to revolutionize vehicles of all types, in stages, within the next 5 to 10 years.

As the industry progresses through the six levels of driving automation, each step requires increased processing capabilities inside the car. Right now, intelligence in the internet of things (IoT) is moving closer to the network edge; we can expect the same to happen in the automotive industry. The sensors needed to enable advanced driver assistance systems (ADAS) and autonomy require higher resolution, and their output must be processed in greater volume, more quickly, and with less latency. The only practical way to do this is to put more processing capability as close to the sensor as possible, within the zonal architectures of future cars.

Overview

Introduction

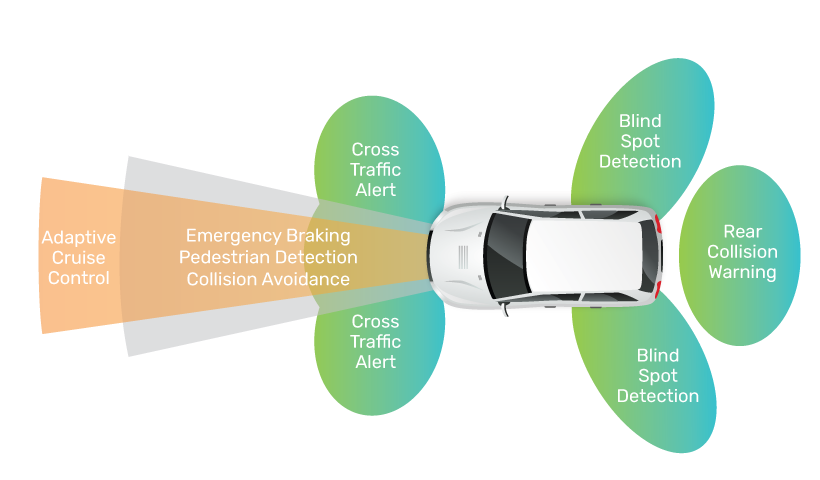

If vehicles are to become more autonomous, they need to understand the surrounding environment. Sensors provide that capability. The three key sensor modalities are radar, lidar and vision. Each has its relative strengths and weaknesses, which means all three will need to operate together. Combining different sensor technologies in a sensor fusion system provides true redundancy for functional safety and improves sensing accuracy. The fourth element, connectivity, enables every part of the system to interoperate. Together, these four elements provide the foundation for the era of autonomous vehicles.

Meeting the Need for ASIL-Certified IP

Autonomous vehicles feature multiple sensors that conform to one of three main types. Radar is expected to feature strongly, with 20 or more individual sensors distributed around a vehicle. As the technology best suited to providing high-resolution ranging data, lidar sensors will play a key role in autonomous driving. As the most mature technology, at least in terms of its use in automotive applications, image sensors will proliferate in autonomous vehicles. All three will be used in multiple ways, such as monitoring the terrain, other road users, pedestrians, and weather conditions, as well as absolute and relative speeds. Sensors will also be used to monitor the vehicle’s interior, for occupant detection and observation.

One challenge here is the amount of raw data generated by the sensors. It would be unfeasible to move that much data around a vehicle’s in-car network or relay it all to a cloud service for processing. The latency and bandwidth requirements would render autonomy impractical, which is why the raw data needs to be processed locally.
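A back-of-envelope calculation shows why moving raw sensor data around the vehicle is impractical. The sketch below is illustrative only: the channel count, ADC sample rate, and sample width are hypothetical figures chosen for round numbers, not specifications of any particular radar, and the function name is an assumption of this example.

```python
def raw_data_rate_bps(channels: int, sample_rate_hz: float, bits_per_sample: int) -> float:
    """Raw output rate of one sensor in bits per second (continuous sampling assumed)."""
    return channels * sample_rate_hz * bits_per_sample


# Hypothetical radar front end: 4 receive channels, 20 MS/s ADCs, 16-bit samples.
per_sensor = raw_data_rate_bps(channels=4, sample_rate_hz=20e6, bits_per_sample=16)

# 20 radar sensors, the lower bound the text gives for a single vehicle.
total = 20 * per_sensor

print(f"per sensor: {per_sensor / 1e9:.2f} Gbit/s, 20 sensors: {total / 1e9:.1f} Gbit/s")
```

Even with these modest assumptions, the radar sensors alone would produce tens of gigabits per second before lidar and cameras are counted, which is the argument for reducing the data at or near the sensor.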

Another challenge relates to making the sensor solutions adaptable as the sensing imperative changes. For example, the range over which the sensor operates is speed dependent. Radar and lidar offer complementary capabilities in relation to resolution, but this, too, may be dependent on weather, light levels, and scene contrast.

However, this highlights other challenges. The engineering need for high-performance processing solutions is clear, but on a commercial level, those solutions can only be used if they are certified for use in an automotive application. They must also meet the processing requirements in an optimized way. Currently, the cost of lidar far exceeds the cost of either radar or image sensing, but the automotive industry accepts that lidar is necessary, because of its unique ability to meet specific sensing requirements.

This indicates the need for a scalable processing platform that can be applied to sensors of all types, optimized for different applications. The operation of automatic emergency braking is quite different from adaptive cruise control, but both may use a combination of lidar and radar sensors. Similarly, blind spot detection may use an image sensor during the day, but radar at night, or in bad weather conditions.

Each of the many use-cases needed to enable full autonomy requires a solution optimized for that purpose. However, with many tens of individual sensors required, OEMs need a way to commoditize what are effectively individual requirements. The answer is to standardize on a common but scalable processing platform, namely, the zonal architecture, including zonal controllers. These zonal controllers must be configurable to provide the right level of high-speed signal processing and the required safety level for various applications.

Functional Safety

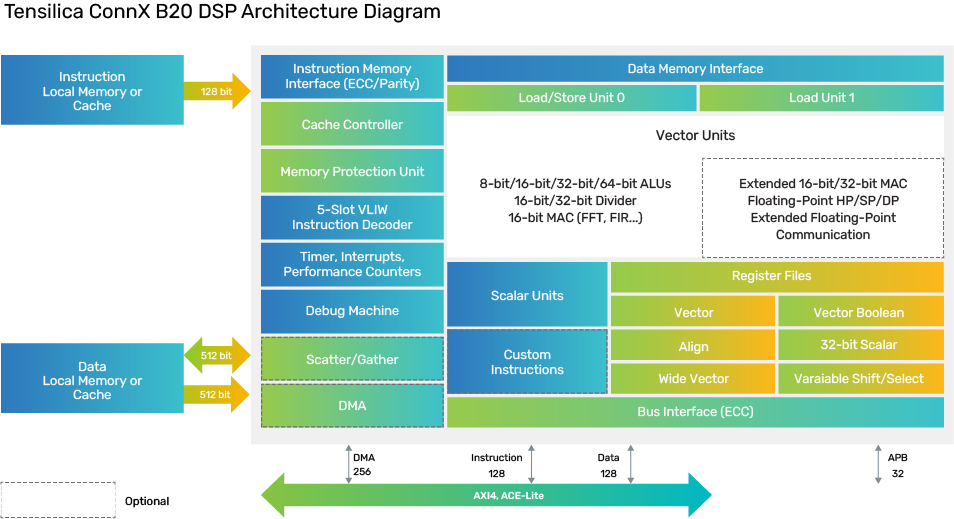

The Cadence Tensilica ConnX B10 and B20 DSPs are optimized for next-generation sensing applications, including radar and lidar, as well as high-performance V2X (vehicle-to-everything) communications. They are also the semiconductor industry’s first dedicated radar, lidar, and V2X DSPs available as licensable IP to achieve Automotive Safety Integrity Level (ASIL) B random hardware fault and ASIL D systematic fault compliance. This level of functional safety certification to ISO 26262:2018 is essential for OEMs and Tier 1 suppliers developing SoCs targeting ADAS solutions and autonomous driving applications.

Advanced Processing for ADAS and Autonomous Driving

The ConnX B10 and B20 DSPs’ instruction set architecture (ISA) supports accelerators designed specifically for radar, lidar, and V2X. The scalability of the ConnX DSP family ensures that engineers can optimize their SoC design for the specific application, while benefiting from the common architecture to support code migration, reusability, and portability.

High Performance

The ConnX DSPs employ single instruction, multiple data (SIMD) vector processing based on a very long instruction word (VLIW) architecture. This supports parallel operations for load/store, MAC, and ALU. The ISA is optimized for linear algebra and complex data, as well as vector compression and expansion. The ISA helps enhance performance in various stages of sensor processing, such as Kalman filtering, MUSIC (multiple signal classification), and DML (deterministic maximum likelihood) processing.
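To make the Kalman filtering stage concrete, the following is a minimal sketch of a scalar Kalman filter that smooths noisy range readings. It assumes a static-state model and made-up measurements and noise variances; it is plain Python for illustration and does not represent ConnX code or any Cadence library.

```python
def kalman_step(x, p, z, q, r):
    """One predict/update cycle of a scalar Kalman filter (static-state model).

    x, p: current estimate and its variance
    z:    new measurement
    q, r: process and measurement noise variances (assumed known)
    """
    # Predict: the state is modeled as constant, so only the uncertainty grows.
    p_pred = p + q
    # Update: blend the prediction and the measurement using the Kalman gain.
    k = p_pred / (p_pred + r)
    x_new = x + k * (z - x)
    p_new = (1.0 - k) * p_pred
    return x_new, p_new


measurements = [10.3, 9.8, 10.1, 10.4, 9.7, 10.0]  # made-up noisy range readings (m)
x, p = 0.0, 100.0  # vague initial guess with a large variance
for z in measurements:
    x, p = kalman_step(x, p, z, q=0.01, r=0.25)

print(round(x, 2), round(p, 3))
```

A tracker in a real sensor would typically carry a state vector (position, velocity, and so on), turning each of these scalar operations into matrix multiplications and inversions, which is where linear algebra support in the instruction set becomes relevant.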

Featuring the fastest clock speeds in the ConnX series, the ConnX B10 and B20 DSPs also offer an optional 32-bit vector fixed-point MAC that can be used to accelerate the processing functions associated with radar/lidar/V2X, including FIR, FFT, convolution, and correlation. Vector double-, single-, and half-precision floating-point operations can also be hardware-accelerated.
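The role of a fixed-point multiply-accumulate (MAC) unit can be illustrated with a small FIR filter. The sketch below uses 16-bit Q15 operands and a wider accumulator purely for readability; the word widths, rounding scheme, and function names are assumptions of this example and do not describe the ConnX datapath.

```python
def to_q15(value: float) -> int:
    """Quantize a float in [-1, 1) to a signed 16-bit Q15 integer, saturating."""
    return max(-32768, min(32767, round(value * 32768)))


def fir_q15(samples, taps):
    """Direct-form FIR on Q15 data: 16x16-bit products summed in a wide accumulator."""
    out = []
    for n in range(len(taps) - 1, len(samples)):
        acc = 0  # stands in for a 32-bit-or-wider hardware accumulator
        for k, tap in enumerate(taps):
            acc += tap * samples[n - k]  # one multiply-accumulate per tap
        # Products are in Q30; round, shift back to Q15, then saturate to 16 bits.
        y = (acc + (1 << 14)) >> 15
        out.append(max(-32768, min(32767, y)))
    return out


taps = [to_q15(0.25)] * 4     # 4-tap moving average
samples = [to_q15(0.5)] * 8   # constant input
print(fir_q15(samples, taps))
```

The inner loop is one MAC per tap per output sample. A vector MAC unit performs many of these per cycle, which is why FIR, convolution, and correlation map so directly onto it.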

The ConnX B10 and B20 DSPs have been optimized to provide the performance needed by advanced MIMO systems, including vector-based filtering, FFT, and linear algebra processing. The low-level processing requirements that these systems impose are also acknowledged, with an instruction set that supports a comprehensive level of bit-oriented operations.

The instruction set also includes optimized instructions for working on 16-bit data to perform complex arithmetic, polynomial evaluation, matrix multiplication, and square root/reciprocal acceleration. In addition, the ISA supports 16- and 32-bit peak search acceleration.
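Peak search is the step that turns a processed spectrum or range profile into candidate detections. A minimal scalar sketch is shown below, assuming a simple fixed threshold and made-up magnitude values; the function name and data are hypothetical, and a hardware-accelerated version would perform the comparisons across a whole vector at once.

```python
def find_peaks(magnitudes, threshold):
    """Return (index, value) for each local maximum at or above the threshold."""
    peaks = []
    for i in range(1, len(magnitudes) - 1):
        m = magnitudes[i]
        # A peak rises above its left neighbor and is not exceeded by its right one.
        if m >= threshold and m > magnitudes[i - 1] and m >= magnitudes[i + 1]:
            peaks.append((i, m))
    return peaks


range_profile = [3, 5, 40, 12, 4, 6, 90, 30, 5, 2]  # made-up magnitudes per range bin
peaks = find_peaks(range_profile, threshold=20)
strongest = max(peaks, key=lambda peak: peak[1])
print(peaks, strongest)
```

Here bins 2 and 6 are reported as detections, while bin 7 is rejected even though it exceeds the threshold, because it lies on the shoulder of the stronger peak next to it.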

Scalability

With an increasing number of antennas needed for higher resolution 4D imaging radar, and as physical layers (PHYs) transition to more advanced standards such as 5G, the processing performance requirement increases dramatically. The ConnX series meets this demand with its highly parallel and scalable architecture. The ConnX B10 and B20 DSPs also provide multi-core solutions to scale processing capacity beyond a single core.
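The growth in processing load with antenna count can be estimated roughly. In a MIMO radar, each transmit/receive pair forms one virtual channel, and each channel needs its own FFT processing. The sketch below counts radix-2 butterflies for the range FFT stage only; the antenna counts, chirp count, and FFT size are hypothetical, and later stages (Doppler and angle processing) would add further load.

```python
import math


def virtual_channels(n_tx: int, n_rx: int) -> int:
    """A MIMO radar with n_tx transmitters and n_rx receivers forms n_tx * n_rx virtual channels."""
    return n_tx * n_rx


def range_fft_butterflies(n_tx: int, n_rx: int, chirps: int, samples_per_chirp: int) -> int:
    """Radix-2 butterfly count for one range FFT per chirp per virtual channel.

    samples_per_chirp is assumed to be a power of two.
    """
    per_fft = (samples_per_chirp // 2) * int(math.log2(samples_per_chirp))
    return virtual_channels(n_tx, n_rx) * chirps * per_fft


# Hypothetical configurations: a 3x4 array versus a 12x16 imaging array.
small = range_fft_butterflies(n_tx=3, n_rx=4, chirps=128, samples_per_chirp=256)
large = range_fft_butterflies(n_tx=12, n_rx=16, chirps=128, samples_per_chirp=256)
print(small, large, large // small)
```

Under these assumptions, quadrupling both the transmit and receive antenna counts multiplies the range FFT work per frame by 16, before any increase in bandwidth or frame rate is considered.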

Flexibility

Although DSPs are associated with the parallel execution of complex algorithms, the ConnX series does not overlook the need for hardware control. The ConnX DSPs also excel at running control code in areas such as the PHY application layer control. When interfacing with hardware blocks is required, ConnX DSPs may interface with the external hardware through dedicated custom interfaces, which deliver virtually unlimited data bandwidth.

Customizability

To further optimize the DSP IP, the ConnX series can also be customized using the Tensilica Instruction Extension (TIE) language. Similar to Verilog, this language can be used to design multi-cycle pipelined execution units, register files, SIMD ALUs, and more, all of which can be used to extend the core architecture. Each extension has its own dedicated instructions that become part of the ISA, allowing software developers to access hardware extensions with ease.

Autonomy Powered by Performance

The need for high-performance radar, lidar, image sensors, and communications is fundamental to autonomous driving. It is widely accepted that general-purpose CPUs are unable to deliver the performance needed, which is why technology leaders have been developing application-specific solutions for some time.

These architectures must deliver high performance with efficient memory utilization, while minimizing energy and cost. The ConnX B10 and B20 DSPs have been designed specifically for radar, lidar, and 5G, delivering 10 times more performance than the other software-compatible members of the ConnX family. Features such as 32-bit fixed-point MACs, double-, single-, and half-precision floating point, more parallelism, and a faster clock speed all come together in an extensible architecture. This architecture is specifically designed to address processing requirements throughout the radar/lidar sensor and V2X application compute chain, as well as meet the needs of automotive OEMs targeting Level 2 and above driving automation.

As the industry’s first extensible DSP IP optimized for radar, lidar, and V2X to receive ASIL B random fault and ASIL D systematic fault compliance certification, the ConnX B10 and B20 DSPs are helping to define this future. The automotive industry now has key technologies that are needed to enable greater levels of autonomy.

The contents of this white paper were first published in eeNews Europe, December 2020.